Linux draws a distinction between code running in kernel (kernel space) and applications running in userland (user space). This is enforced at the hardware level - in x86-speak[1], kernel space code runs in ring 0 and user space code runs in ring 3[2]. If you're running in ring 3 and you attempt to touch memory that's only accessible in ring 0, the hardware will raise a fault. No matter how privileged your ring 3 code, you don't get to touch ring 0.

Kind of. In theory. Traditionally this wasn't well enforced. At the most basic level, since root can load kernel modules, you could just build a kernel module that performed any kernel modifications you wanted and then have root load it. Technically user space code wasn't modifying kernel space code, but the difference was pretty semantic rather than useful. But it got worse - root could also map memory ranges belonging to PCI devices[3], and if the device could perform DMA you could just ask the device to overwrite bits of the kernel[4]. Or root could modify special CPU registers ("Model Specific Registers", or MSRs) that alter CPU behaviour via the /dev/msr interface, and compromise the kernel boundary that way.

It turns out that there were a number of ways root was effectively equivalent to ring 0, and the boundary was more about reliability (ie, a process running as root that ends up misbehaving should still only be able to crash itself rather than taking down the kernel with it) than security. After all, if you were root you could just replace the on-disk kernel with a backdoored one and reboot. Going deeper, you could replace the bootloader with one that automatically injected backdoors into a legitimate kernel image. We didn't have any way to prevent this sort of thing, so attempting to harden the root/kernel boundary wasn't especially interesting.

In 2012 Microsoft started requiring vendors ship systems with UEFI Secure Boot, a firmware feature that allowed[5] systems to refuse to boot anything without an appropriate signature. This not only enabled the creation of a system that drew a strong boundary between root and kernel, it arguably required one - what's the point of restricting what the firmware will stick in ring 0 if root can just throw more code in there afterwards? What ended up as the Lockdown Linux Security Module provides the tooling for this, blocking userspace interfaces that can be used to modify the kernel and enforcing that any modules have a trusted signature.

But that comes at something of a cost. Most of the features that Lockdown blocks are fairly niche, so the direct impact of having it enabled is small. Except that it also blocks hibernation[6], and it turns out some people were using that. The obvious question is "what does hibernation have to do with keeping root out of kernel space", and the answer is a little convoluted and is tied into how Linux implements hibernation. Basically, Linux saves system state into the swap partition and modifies the header to indicate that there's a hibernation image there instead of swap. On the next boot, the kernel sees the header indicating that it's a hibernation image, copies the contents of the swap partition back into RAM, and then jumps back into the old kernel code. What ensures that the hibernation image was actually written out by the kernel? Absolutely nothing, which means a motivated attacker with root access could turn off swap, write a hibernation image to the swap partition themselves, and then reboot. The kernel would happily resume into the attacker's image, giving the attacker control over what gets copied back into kernel space.
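To make the "absolutely nothing" concrete, here's a minimal sketch of the kind of check involved. It assumes a 4096-byte page, a 10-byte signature field at the end of the first page of the swap device, and the signature strings shown; the function name is made up for illustration. The point is that the decision rests entirely on bytes that root can write.

```python
PAGE_SIZE = 4096   # assumption: page size of the machine that wrote the header
SIG_LEN = 10       # assumption: signature occupies the last 10 bytes of page 0

SWAP_SIGS = (b"SWAP-SPACE", b"SWAPSPACE2")
HIBERNATE_SIG = b"S1SUSPEND"  # assumption: stored NUL-padded to 10 bytes


def classify_first_page(page: bytes) -> str:
    """Guess what a swap device holds from its first page (hypothetical helper)."""
    if len(page) < PAGE_SIZE:
        raise ValueError("need the full first page")
    sig = page[PAGE_SIZE - SIG_LEN:PAGE_SIZE]
    if sig in SWAP_SIGS:
        return "swap"
    if sig.rstrip(b"\x00") == HIBERNATE_SIG:
        return "hibernation image"
    return "unknown"
```

Nothing in that check says who wrote the signature, which is exactly the problem.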

This is annoying, because normally when we think about attacks on swap we mitigate it by requiring an encrypted swap partition. But in this case, our attacker is root, and so already has access to the plaintext version of the swap partition. Disk encryption doesn't save us here. We need some way to verify that the hibernation image was written out by the kernel, not by root. And thankfully we have some tools for that.

Trusted Platform Modules (TPMs) are cryptographic coprocessors[7] capable of doing things like generating encryption keys and then encrypting things with them. You can ask a TPM to encrypt something with a key that's tied to that specific TPM - the OS has no access to the decryption key, and nor does any other TPM. So we can have the kernel generate an encryption key, encrypt part of the hibernation image with it, and then have the TPM encrypt that key. We store the encrypted copy of the key in the hibernation image as well. On resume, the kernel reads the encrypted copy of the key, passes it to the TPM, gets the decrypted copy back and is able to verify the hibernation image.

That's great! Except root can do exactly the same thing. This tells us the hibernation image was generated on this machine, but doesn't tell us that it was done by the kernel. We need some way to be able to differentiate between keys that were generated in kernel and ones that were generated in userland. TPMs have the concept of "localities" (effectively privilege levels) that would be perfect for this. Userland is only able to access locality 0, so the kernel could simply use locality 1 to encrypt the key. Unfortunately, despite trying pretty hard, I've been unable to get localities to work. The motherboard chipset on my test machines simply doesn't forward any accesses to the TPM unless they're for locality 0. I needed another approach.

TPMs have a set of Platform Configuration Registers (PCRs), intended for keeping a record of system state. The OS isn't able to modify the PCRs directly. Instead, the OS provides a cryptographic hash of some material to the TPM. The TPM takes the existing PCR value, appends the new hash to that, and then stores the hash of the combination in the PCR - a process called "extension". This means that the new value of the PCR depends not only on the value of the new data, it depends on the previous value of the PCR - and, in turn, that previous value depended on its previous value, and so on. The only way to get to a specific PCR value is to either (a) break the hash algorithm, or (b) perform exactly the same sequence of writes. On system reset the PCRs go back to a known value, and the entire process starts again.
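Extension is easy to model in a few lines. This is a sketch rather than anything a TPM exposes directly: it assumes a SHA-256 PCR bank with an all-zero reset value, and the function names are mine.

```python
import hashlib

PCR_SIZE = 32  # assumption: SHA-256 bank, so PCR values are 32 bytes


def extend(pcr: bytes, data: bytes) -> bytes:
    """Return the PCR value after extending it with a hash of `data`."""
    digest = hashlib.sha256(data).digest()
    # new PCR = H(old PCR || H(data))
    return hashlib.sha256(pcr + digest).digest()


def replay(measurements) -> bytes:
    """Start from the reset value and apply each measurement in order."""
    pcr = bytes(PCR_SIZE)  # assumption: reset value is all zeros
    for m in measurements:
        pcr = extend(pcr, m)
    return pcr
```

Because each step feeds the previous value back into the hash, `replay([b"a", b"b"])` and `replay([b"b", b"a"])` end up at different values: order matters just as much as content.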

Some PCRs are different. PCR 23, for example, can be reset back to its original value without resetting the system. We can make use of that. The first thing we need to do is to prevent userland from being able to reset or extend PCR 23 itself. All TPM accesses go through the kernel, so this is a simple matter of parsing the write before it's sent to the TPM and returning an error if it's a sensitive command that would touch PCR 23. We now know that any change in PCR 23's state will be restricted to the kernel.
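The filtering itself is not complicated. The sketch below is written in Python for readability and is not the code from the patchset; it assumes the standard TPM 2.0 command layout (a 10-byte big-endian header of tag, size and command code, followed by the handle area) and the command codes for TPM2_PCR_Extend and TPM2_PCR_Reset. The function name is hypothetical.

```python
import struct

# TPM 2.0 command codes (assumed from the TPM 2.0 specification)
TPM2_CC_PCR_RESET = 0x0000013D
TPM2_CC_PCR_EXTEND = 0x00000182

RESTRICTED_PCR = 23
HEADER_LEN = 10  # u16 tag + u32 size + u32 command code


def touches_restricted_pcr(cmd: bytes) -> bool:
    """Return True if a raw TPM command would extend or reset PCR 23."""
    if len(cmd) < HEADER_LEN + 4:
        return False  # too short to carry a PCR handle
    _tag, _size, code = struct.unpack_from(">HII", cmd, 0)
    if code not in (TPM2_CC_PCR_RESET, TPM2_CC_PCR_EXTEND):
        return False
    # For both commands the first handle is the PCR being operated on,
    # and a PCR handle is simply the PCR index.
    (handle,) = struct.unpack_from(">I", cmd, HEADER_LEN)
    return handle == RESTRICTED_PCR
```

A real filter would return an error to userland when this is true, and would need to cover any other command capable of changing the PCR; this sketch only looks at the two named above.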

When we encrypt material with the TPM, we can ask it to record the PCR state. This is given back to us as metadata accompanying the encrypted secret. Along with the metadata is an additional signature created by the TPM, which can be used to prove that the metadata is both legitimate and associated with this specific encrypted data. In our case, that means we know what the value of PCR 23 was when we encrypted the key. That means that if we simply extend PCR 23 with a known value in-kernel before encrypting our key, we can look at the value of PCR 23 in the metadata. If it matches, the key was encrypted by the kernel - userland can create its own key, but it has no way to extend PCR 23 to the appropriate value first. We now know that the key was generated by the kernel.
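Here's a rough sketch of that comparison. It assumes a SHA-256 bank, an all-zero reset value for PCR 23, and creation metadata whose PCR digest is the hash of the selected PCR values with only PCR 23 selected. The marker string and function names are invented for illustration, and checking the TPM's signature over the metadata is left out entirely.

```python
import hashlib
import hmac

# Hypothetical value the kernel extends into PCR 23 before creating the key.
KERNEL_MARKER = b"hypothetical-kernel-hibernation-marker"


def extend(pcr: bytes, data: bytes) -> bytes:
    """PCR extension: H(old PCR || H(data))."""
    return hashlib.sha256(pcr + hashlib.sha256(data).digest()).digest()


def expected_creation_digest() -> bytes:
    """Digest the metadata should carry if the kernel created the key."""
    pcr23 = extend(bytes(32), KERNEL_MARKER)  # reset, then extend once
    return hashlib.sha256(pcr23).digest()     # digest over the one selected PCR


def key_was_created_by_kernel(creation_pcr_digest: bytes) -> bool:
    """Compare the recorded PCR digest against the expected one."""
    return hmac.compare_digest(creation_pcr_digest, expected_creation_digest())
```

A key created from userland would carry the digest of whatever PCR 23 held at the time, typically the un-extended reset value, and so would fail the comparison.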

But what if the attacker is able to gain access to the encrypted key? Let's say a kernel bug is hit that prevents hibernation from resuming, and you boot back up without wiping the hibernation image. Root can then read the key from the partition, ask the TPM to decrypt it, and then use that to create a new hibernation image. We probably want to prevent that as well. Fortunately, when you ask the TPM to encrypt something, you can ask that the TPM only decrypt it if the PCRs have specific values. "Sealing" material to the TPM in this way allows you to block decryption if the system isn't in the desired state. So, we define a policy that says that PCR 23 must have the same value at resume as it did on hibernation. On resume, the kernel resets PCR 23, extends it to the same value it did during hibernation, and then attempts to decrypt the key. Afterwards, it resets PCR 23 back to the initial value. Even if an attacker gains access to the encrypted copy of the key, the TPM will refuse to decrypt it.
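The hibernate and resume flow can be modelled with a toy TPM. To be clear, this is a simulation of the logic and not a real TPM interface: the class, the method names and the marker value are all hypothetical, the "sealed" blob isn't actually encrypted, and the model only tracks PCR 23.

```python
import hashlib
from dataclasses import dataclass

KERNEL_MARKER = b"hypothetical-kernel-hibernation-marker"


@dataclass(frozen=True)
class SealedBlob:
    secret: bytes        # a real TPM would encrypt this; the toy does not
    policy_pcr23: bytes  # PCR 23 value required for unsealing


class ToyTPM:
    """Models only PCR 23 plus seal/unseal against its value."""

    def __init__(self) -> None:
        self.pcr23 = bytes(32)

    def reset_pcr23(self) -> None:
        self.pcr23 = bytes(32)

    def extend_pcr23(self, data: bytes) -> None:
        digest = hashlib.sha256(data).digest()
        self.pcr23 = hashlib.sha256(self.pcr23 + digest).digest()

    def seal(self, secret: bytes) -> SealedBlob:
        return SealedBlob(secret, self.pcr23)

    def unseal(self, blob: SealedBlob) -> bytes:
        if blob.policy_pcr23 != self.pcr23:
            raise PermissionError("PCR policy not satisfied")
        return blob.secret


def kernel_hibernate(tpm: ToyTPM, key: bytes) -> SealedBlob:
    tpm.reset_pcr23()
    tpm.extend_pcr23(KERNEL_MARKER)
    blob = tpm.seal(key)
    tpm.reset_pcr23()  # leave PCR 23 in its initial state afterwards
    return blob


def kernel_resume(tpm: ToyTPM, blob: SealedBlob) -> bytes:
    tpm.reset_pcr23()
    tpm.extend_pcr23(KERNEL_MARKER)
    try:
        return tpm.unseal(blob)
    finally:
        tpm.reset_pcr23()  # reset whether or not unsealing succeeded
```

Root, who can't extend PCR 23, only ever sees it at the reset value, so calling `unseal` directly on a leaked blob fails the policy check.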

And that's what this patchset implements. There's one fairly significant flaw at the moment, which is simply that an attacker can just reboot into an older kernel that doesn't implement the PCR 23 blocking and set up state by hand. Fortunately, this can be avoided using another aspect of the boot process. When you boot something via UEFI Secure Boot, the signing key used to verify the booted code is measured into PCR 7 by the system firmware. In the Linux world, the Shim bootloader then measures any additional keys that are used. By either using a new key to tag kernels that have support for the PCR 23 restrictions, or by embedding some additional metadata in the kernel that indicates the presence of this feature and measuring that, we can have a PCR 7 value that verifies that the PCR 23 restrictions are present. We then seal the key to PCR 7 as well as PCR 23, and if an attacker boots into a kernel that doesn't have this feature the PCR 7 value will be different and the TPM will refuse to decrypt the secret.

While there's a whole bunch of complexity here, the process should be entirely transparent to the user. The current implementation requires a TPM 2, and I'm not certain whether TPM 1.2 provides all the features necessary to do this properly - if so, extending it shouldn't be hard, but also all systems shipped in the past few years should have a TPM 2, so that's going to depend on whether there's sufficient interest to justify the work. And we're also at the early days of review, so there's always the risk that I've missed something obvious and there are terrible holes in this. And, well, given that it took almost 8 years to get the Lockdown patchset into mainline, let's not assume that I'm good at landing security code.

[1] Other architectures use different terminology here, such as "supervisor" and "user" mode, but it's broadly equivalent

[2] In theory rings 1 and 2 would allow you to run drivers with privileges somewhere between full kernel access and userland applications, but in reality we just don't talk about them in polite company

[3] This is how graphics worked in Linux before kernel modesetting turned up. XFree86 would just map your GPU's registers into userland and poke them directly. This was not a huge win for stability

[4] IOMMUs can help you here, by restricting the memory PCI devices can DMA to or from. The kernel then gets to allocate ranges for device buffers and configure the IOMMU such that the device can't DMA to anything else. Except that region of memory may still contain sensitive material such as function pointers, and attacks like this can still cause you problems as a result.

[5] This describes why I'm using "allowed" rather than "required" here

[6] Saving the system state to disk and powering down the platform entirely - significantly slower than suspending the system while keeping state in RAM, but also resilient against the system losing power.

[7] With some handwaving around "coprocessor". TPMs can't be part of the OS or the system firmware, but they don't technically need to be an independent component. Intel have a TPM implementation that runs on the Management Engine, a separate processor built into the motherboard chipset. AMD have one that runs on the Platform Security Processor, a small ARM core built into their CPU. Various ARM implementations run a TPM in Trustzone, a special CPU mode that (in theory) is able to access resources that are entirely blocked off from anything running in the OS, kernel or otherwise.


Update on 2021-02-19: clarified, that Signed-By requires apt >= 1.1, thanks Vincent Bernat

Many upstream projects provide Debian repository instructions like this:

(Blogging this, since this is a recurring anti-pattern I noticed at several customers and often comes up during deployments of 3rd party repositories.)

Update on 2021-02-19: clarified, that Signed-By requires apt >= 1.1, thanks Vincent Bernat

Many upstream projects provide Debian repository instructions like this:

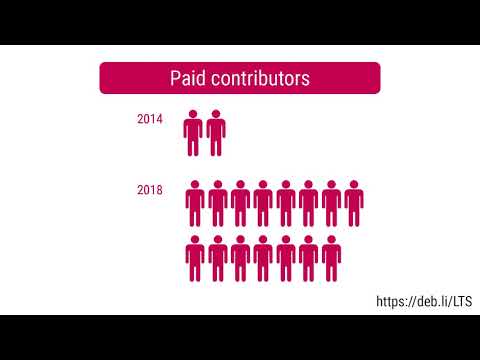

Just over 7 months ago, I

Just over 7 months ago, I

Here is my monthly update covering what I have been doing in the free software world during November 2020 (

Here is my monthly update covering what I have been doing in the free software world during November 2020 (